SOD: Sidebar Diversion

I couldn’t get the idea out of my head that the Avatar rendering cluster required 1 petabyte of storage. However, a slide show of the facilities used to film the actors opened my eyes.

The petabyte is required not just for the finished product. It’s needed to store all the sensor and camera data as well. Okay. I accept that Weta needed 1PB. How does one go about creating a petabyte storage facility? What are the tradeoffs? How much does it cost to build and then to maintain?

I need to get this out of my head and free up some brain cycles to continue with my Seeds of Discontent series. This article is a sidebar.

Disclaimer: I’m using a server build derived from the Seeds of Discontent serial (see also Seeds of Discontent: System Benchmarking Hardware). It isn’t a traditional server-room server, but it serves as a baseline talking point. Besides, I can get budgetary pricing from newegg.com. It’s good enough for the purposes of this discussion. I’m sure the staff at Weta (or ILM or DreamWorks or Pixar) would have more insight into what *really* works.

I’ll choose the 4U chassis for this exercise since its airflow better approximates that of a desktop chassis. The 50U rack is taller than usual, but it holds twelve 4U servers (48U) plus a switch.

In the Seeds of Discontent serial, the HDD array uses the cheapest drives available; the final size is not important. In this sidebar discussion, final HDD capacity *is* important. This is not a single desktop box but a compute and storage cluster.

Each server functions as a compute unit as well as a storage unit.

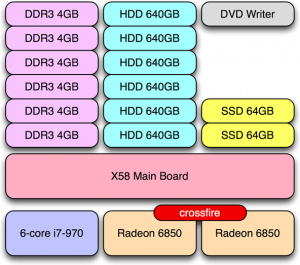

A commodity multi-core CPU and two commodity PCI Express GPU subsystems make up the compute unit. Primary storage and swap for the compute unit live on a two-disk SSD RAID 0 array. The GPU cards have their own RAM, while the CPU has two triple-channel banks of DDR3.

The server also hosts an HDD array, which is not private storage but part of a larger storage cluster. For this exercise, I’ve arbitrarily chosen GlusterFS.

I’ve budgeted $2,500 USD per server (less the HDD array).

(circa mid-November 2010)

| Item | Cost (USD) |
|---|---|
| case+PSU | $200 |
| mainboard | 230 |
| SSD | 135 |
| SSD | 135 |
| DDR3 24GB | 550 |
| CPU | 850 |
| GPU | 200 |
| GPU | 200 |
| Total per server | $2,500 |
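The per-server figure is a straight sum of the parts list. Here is a minimal Python sketch that checks it. It assumes the second $200 line item is the second GPU card, since the compute unit has two GPUs and the list already includes its CPU. The dictionary keys are illustrative labels.

```python
# Per-server bill of materials (USD, circa mid-November 2010), HDD array excluded.
SERVER_BOM: dict[str, int] = {
    "case+PSU": 200,
    "mainboard": 230,
    "SSD #1": 135,
    "SSD #2": 135,
    "DDR3 24GB": 550,
    "CPU": 850,
    "GPU #1": 200,
    "GPU #2": 200,  # assumption: the second $200 item is the second GPU
}

def server_cost(bom: dict[str, int]) -> int:
    """Sum the component prices for one server (HDD array excluded)."""
    return sum(bom.values())

print(f"${server_cost(SERVER_BOM):,} per server")  # $2,500 per server
```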

In addition, I’ve budgeted $1,000 per rack and $1,000 per switch. A fully constructed rack sans HDD is $32,000. YMMV.

| Item | Cost (USD) |
|---|---|
| rack | $1,000 |
| switch | 1,000 |
| servers (2,500 × 12 servers/rack) | 30,000 |
| Total per rack | $32,000 |
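The rack budget is one rack, one switch, and twelve servers. This sketch computes that total and checks that the servers fit. The source does not give the switch height, so the sketch assumes it takes 1U.

```python
RACK_UNITS = 50        # rack height in U
SERVER_UNITS = 4       # 4U chassis
SWITCH_UNITS = 1       # assumption: the switch occupies 1U
SERVERS_PER_RACK = 12

def rack_cost(server_cost: int = 2_500, rack: int = 1_000, switch: int = 1_000,
              servers: int = SERVERS_PER_RACK) -> int:
    """Capital cost of one fully populated rack, HDDs excluded."""
    return rack + switch + servers * server_cost

# Twelve 4U servers (48U) plus a 1U switch must fit in the 50U rack.
assert SERVERS_PER_RACK * SERVER_UNITS + SWITCH_UNITS <= RACK_UNITS
print(f"${rack_cost():,} per rack")  # $32,000 per rack
```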

Let’s add the HDDs to build out a petabyte cluster. Since I’m using the Asus Rampage III (admittedly not a server mainboard), the two GPUs fully consume the PCI Express lanes, leaving none for a RAID card. The two SSDs occupy the two SATA III channels, leaving the seven SATA II channels for the HDD array. Each server therefore adds seven HDDs to the cluster, and each rack adds 84.

As seen from this list (circa mid-November 2010), the lower capacity drives are not the cheapest drives per gigabyte.

| SKU | $USD | Count | GB | $/GB | $K/PB | HDD/PB |
|---|---|---|---|---|---|---|
| WD5002ABYS-20PK | 1750 | 20 | 500 | 0.175 | 175 | 2000 |
| WD7502ABYS-20PK | 2500 | 20 | 750 | 0.167 | 167 | 1333 |
| 0A39289-20PK | 2700 | 20 | 1000 | 0.135 | 135 | 1000 |
| WD7501AALS-20PK | 1550 | 20 | 750 | 0.103 | 103 | 1333 |
| WD6401AALS-20PK | 1300 | 20 | 640 | 0.102 | 102 | 1563 |
| WD5000AADS-20PK | 900 | 20 | 500 | 0.090 | 90 | 2000 |
| 0F10381-20PK | 900 | 20 | 500 | 0.090 | 90 | 2000 |
| WD1001FALS-20PK | 1800 | 20 | 1000 | 0.090 | 90 | 1000 |
| WD5000AAKS-20PK | 880 | 20 | 500 | 0.088 | 88 | 2000 |
| WD6400AARS | 55 | 1 | 640 | 0.086 | 86 | 1563 |
| WD7500AADS-20PK | 1250 | 20 | 750 | 0.083 | 83 | 1333 |
| ST3500418AS | 40 | 1 | 500 | 0.080 | 80 | 2000 |
| WD7500AADS | 55 | 1 | 750 | 0.073 | 73 | 1333 |
| WD20EVDS-20PK | 2800 | 20 | 2000 | 0.070 | 70 | 500 |
| 0F10383-20PK | 1400 | 20 | 1000 | 0.070 | 70 | 1000 |
| WD10EALS-20PK | 1350 | 20 | 1000 | 0.068 | 68 | 1000 |
| WD10EARS | 65 | 1 | 1000 | 0.065 | 65 | 1000 |
| WD10EARS-20PK | 1200 | 20 | 1000 | 0.060 | 60 | 1000 |
| WD15EARS-20PK | 1700 | 20 | 1500 | 0.057 | 57 | 667 |
| ST31000528AS | 50 | 1 | 1000 | 0.050 | 50 | 1000 |
| ST31500341AS | 60 | 1 | 1500 | 0.040 | 40 | 667 |
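Each derived column follows from three inputs: the package price, the number of drives per package, and the per-drive capacity. Here is a minimal Python sketch for a few rows. It assumes decimal units (1 PB = 1,000,000 GB) and rounds drives per petabyte to the nearest whole drive, which is how the HDD/PB column appears to be rounded.

```python
import math
from dataclasses import dataclass

PB_IN_GB = 1_000_000  # decimal units: 1 PB = 1,000,000 GB

@dataclass(frozen=True)
class DriveOffer:
    sku: str
    price_usd: float  # price of the whole package
    count: int        # drives in the package (1 or a 20-pack)
    gb: int           # capacity of one drive

    @property
    def usd_per_gb(self) -> float:
        return self.price_usd / (self.count * self.gb)

    @property
    def kusd_per_pb(self) -> float:
        # $/GB x 1,000,000 GB / 1,000 = thousands of dollars per petabyte
        return self.usd_per_gb * PB_IN_GB / 1_000

    @property
    def drives_per_pb(self) -> int:
        # Nearest whole drive (half rounds up), matching the HDD/PB column.
        return math.floor(PB_IN_GB / self.gb + 0.5)

offers = [
    DriveOffer("WD5002ABYS-20PK", 1750, 20, 500),
    DriveOffer("WD6401AALS-20PK", 1300, 20, 640),
    DriveOffer("WD20EVDS-20PK", 2800, 20, 2000),
    DriveOffer("ST31500341AS", 60, 1, 1500),
]
for d in offers:
    print(f"{d.sku:16} {d.usd_per_gb:.3f} {d.kusd_per_pb:5.0f} {d.drives_per_pb:5d}")
```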

Downselect the cheapest drive at each capacity point greater than or equal to 1TB.

| SKU | $USD | Count | GB | $/GB | $K/PB | HDD/PB |
|---|---|---|---|---|---|---|
| WD20EVDS-20PK | 2800 | 20 | 2000 | 0.070 | 70 | 500 |
| ST31000528AS | 50 | 1 | 1000 | 0.050 | 50 | 1000 |
| ST31500341AS | 60 | 1 | 1500 | 0.040 | 40 | 667 |

I want to minimize both the number of drives and the cost. The Seagate 1TB drive costs more per GB than the Seagate 1.5TB drive and requires more drives per PB, so it is immediately eliminated. The competition is between the Western Digital 2TB and the Seagate 1.5TB drives.
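Two steps are involved here. The downselect keeps the cheapest drive at each capacity point. The elimination then discards any drive that another drive beats on both $/GB and drives per PB, which is usually called a Pareto-dominance filter. The sketch below applies both steps to the 1TB-and-larger rows of the price table. The function names are illustrative.

```python
from typing import NamedTuple

class Offer(NamedTuple):
    sku: str
    gb: int             # capacity per drive
    usd_per_gb: float
    drives_per_pb: int

# Rows of the price table with capacity of 1 TB or more.
OFFERS = [
    Offer("0A39289-20PK", 1000, 0.135, 1000),
    Offer("WD1001FALS-20PK", 1000, 0.090, 1000),
    Offer("WD20EVDS-20PK", 2000, 0.070, 500),
    Offer("0F10383-20PK", 1000, 0.070, 1000),
    Offer("WD10EALS-20PK", 1000, 0.068, 1000),
    Offer("WD10EARS", 1000, 0.065, 1000),
    Offer("WD10EARS-20PK", 1000, 0.060, 1000),
    Offer("WD15EARS-20PK", 1500, 0.057, 667),
    Offer("ST31000528AS", 1000, 0.050, 1000),
    Offer("ST31500341AS", 1500, 0.040, 667),
]

def cheapest_per_capacity(offers: list[Offer], min_gb: int = 1000) -> list[Offer]:
    """Keep the lowest $/GB offer at each capacity point >= min_gb."""
    best: dict[int, Offer] = {}
    for o in offers:
        if o.gb >= min_gb and (o.gb not in best or o.usd_per_gb < best[o.gb].usd_per_gb):
            best[o.gb] = o
    return list(best.values())

def dominates(a: Offer, b: Offer) -> bool:
    """True if a is no worse than b on $/GB and drives/PB, and strictly better on one."""
    no_worse = a.usd_per_gb <= b.usd_per_gb and a.drives_per_pb <= b.drives_per_pb
    better = a.usd_per_gb < b.usd_per_gb or a.drives_per_pb < b.drives_per_pb
    return no_worse and better

def pareto_front(offers: list[Offer]) -> list[Offer]:
    """Drop every offer that some other offer dominates."""
    return [b for b in offers if not any(dominates(a, b) for a in offers)]

shortlist = cheapest_per_capacity(OFFERS)
print([o.sku for o in shortlist])                # ST31000528AS, WD20EVDS-20PK, ST31500341AS
print([o.sku for o in pareto_front(shortlist)])  # WD20EVDS-20PK, ST31500341AS
```

Neither survivor dominates the other. That is why the choice comes down to weighing cost against drive count.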

If cost were the only issue, then the Seagate drive would win. If drive count were the only issue then the Western Digital drive would win. To get closer to an answer, let’s build out the storage cluster.

Petabyte Cluster with Just a Bunch of Disks

| Drive | HDD count | Servers | Racks | HDD cost (USD) | Rack cost (USD) | Total cost (USD) |
|---|---|---|---|---|---|---|
| 2.0TB | 500 | 72 | 6 | 70,000 | 192,000 | 262,000 |
| 1.5TB | 667 | 96 | 8 | 40,000 | 256,000 | 296,000 |
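The JBOD sizing chains together four roundings up: drives per petabyte, servers at seven drives each, racks at twelve servers each, and then cost. Here is a minimal sketch. It assumes per-drive prices of $140 (a WD20EVDS 20-pack at $2,800) and $60 (a single ST31500341AS), and it prices every rack as fully built at $32,000. It reports $40,020 where the table rounds to $40,000.

```python
import math

PB_GB = 1_000_000         # 1 PB usable, decimal units
DRIVES_PER_SERVER = 7     # SATA II ports left for the HDD array
SERVERS_PER_RACK = 12
RACK_COST = 32_000        # rack + switch + 12 servers, HDD excluded

def jbod_cluster(drive_gb: int, usd_per_drive: float):
    """Size and price a 1 PB cluster with no redundancy (JBOD)."""
    drives = math.ceil(PB_GB / drive_gb)
    servers = math.ceil(drives / DRIVES_PER_SERVER)
    racks = math.ceil(servers / SERVERS_PER_RACK)
    hdd_cost = drives * usd_per_drive
    rack_cost = racks * RACK_COST        # each rack priced as fully populated
    return drives, servers, racks, hdd_cost, rack_cost, hdd_cost + rack_cost

print(jbod_cluster(2000, 2800 / 20))   # WD20EVDS-20PK: $140 per drive
print(jbod_cluster(1500, 60))          # ST31500341AS: $60 per drive
```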

Even though the 2TB drives cost $30,000 more than the 1.5TB drives, the 1.5TB cluster costs $34,000 more in total because it needs more servers and racks. Furthermore, there is no redundancy to protect against drive failure. How likely is a drive to fail? It’s not just likely to happen; it will happen. If a single drive fails on average once every five years, then some drive in a pool of 500 will fail on average every 1/100 of a year (3.65 days, or roughly two drives a week).
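That estimate divides the per-drive lifetime by the pool size. The sketch below applies the same arithmetic to the pool sizes in the tables that follow. It uses the same simplifying assumption as the text: every drive fails independently and on average once in five years.

```python
DAYS_PER_YEAR = 365
MEAN_LIFE_YEARS = 5   # each drive fails, on average, once in five years

def days_between_failures(pool_size: int, life_years: float = MEAN_LIFE_YEARS) -> float:
    """Mean gap between failures anywhere in a pool of drives failing independently."""
    return life_years * DAYS_PER_YEAR / pool_size

for pool in (500, 700, 1000, 1334):
    gap = days_between_failures(pool)
    print(f"{pool:5d} drives: a failure every {gap:.2f} days, about {7 / gap:.1f} per week")
```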

Without debate, I posit that it is not possible to make nightly backups of a petabyte storage cluster. First, we’d need a second petabyte. Second, that’s a lot of data to move, and the cluster needs to run compute jobs (rendering) continuously. The solution is redundancy, either on the local machine (e.g., RAID 6) or through GlusterFS replication (analogous to RAID 10, but at the cluster level).

Petabyte Cluster with RAID-6

| Drive | HDD count | Servers | Racks | HDD cost (USD) | Rack cost (USD) | Total cost (USD) |
|---|---|---|---|---|---|---|
| 2.0TB | 700 | 100 | 8.3 | 98,000 | 268,000 | 366,000 |
| 1.5TB | 938 | 134 | 11.2 | 56,000 | 359,000 | 415,000 |

Petabyte Cluster with RAID-10

| Drive | HDD count | Servers | Racks | HDD cost (USD) | Rack cost (USD) | Total cost (USD) |
|---|---|---|---|---|---|---|
| 2.0TB | 1,000 | 144 | 12 | 140,000 | 384,000 | 524,000 |
| 1.5TB | 1,334 | 192 | 16 | 80,000 | 512,000 | 592,000 |
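The two redundancy schemes differ only in how many raw drives back each data drive. The sketch below computes drives, servers, and racks for each. It assumes each server holds one 7-drive RAID 6 set (5 data plus 2 parity) and that RAID 10 or two-way GlusterFS replication stores every block twice. It returns minimum whole counts. The tables in places round up to fill whole racks (144 servers rather than 143 for 2TB RAID 10) or show fractional racks.

```python
import math

PB_GB = 1_000_000        # 1 PB usable, decimal units
DRIVES_PER_SERVER = 7    # SATA II ports available for the HDD array
SERVERS_PER_RACK = 12

def size_redundant(drive_gb: int, scheme: str) -> tuple[int, int, int]:
    """Return (drives, servers, racks) for 1 PB usable under RAID 6 or RAID 10."""
    data_drives = math.ceil(PB_GB / drive_gb)
    if scheme == "raid6":
        # Assumption: each server holds one 7-drive RAID 6 set (5 data + 2 parity).
        servers = math.ceil(data_drives / (DRIVES_PER_SERVER - 2))
        drives = servers * DRIVES_PER_SERVER
    elif scheme == "raid10":
        # Mirroring, or two-way GlusterFS replication: two copies of every block.
        drives = 2 * data_drives
        servers = math.ceil(drives / DRIVES_PER_SERVER)
    else:
        raise ValueError(f"unknown scheme: {scheme!r}")
    racks = math.ceil(servers / SERVERS_PER_RACK)
    return drives, servers, racks

for scheme in ("raid6", "raid10"):
    for gb in (2000, 1500):
        print(scheme, gb, size_redundant(gb, scheme))
```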

The drive count really starts to add up, leading to more frequent drive failures. With RAID 10 (or the equivalent GlusterFS replication scheme), the operations team can expect to replace roughly four to five drives per week.

But the larger question is: how many servers does the compute cluster need? What if rendering needed no more than 60 compute nodes? If we fixed the compute node count at 60, we would need more drives per server. For the sake of discussion, assume we could load 24 drives per server, but that doubles the cost per server before drive costs (i.e., 2 × $2,500 = $5,000 per server sans HDD).

Furthermore, assume we’re using GlusterFS replication for the storage cluster redundancy. This pushes the drive count up but avoids the complexity of building and maintaining a local 24-drive RAID system on every server.

Petabyte 60 Server Cluster with RAID-10

| Drive | HDD count | Servers | Racks | HDD cost (USD) | Rack cost (USD) | Total cost (USD) |
|---|---|---|---|---|---|---|
| 2.0TB | 1,000 | 60 | 5 | 140,000 | 310,000 | 450,000 |
| 1.5TB | 1,334 | 60 | 5 | 80,000 | 310,000 | 390,000 |
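With the node count fixed, the server side of the budget is identical for both drive choices. The capital difference therefore comes entirely from the drives. This sketch applies the stated $5,000-per-server assumption and the assumed 24 drive bays per server. It also checks that the replicated drive count fits in 60 × 24 = 1,440 bays.

```python
import math

SERVERS = 60                      # compute node count fixed at 60
BAYS_PER_SERVER = 24              # assumed 24-drive server
SERVER_COST = 2 * 2_500           # doubled server cost, HDD excluded
RACK_PLUS_SWITCH = 1_000 + 1_000
SERVERS_PER_RACK = 12

def fixed_node_cluster(drives: int, usd_per_drive: float):
    """Return (racks, HDD cost, rack cost, total) for the 60-server cluster."""
    if drives > SERVERS * BAYS_PER_SERVER:
        raise ValueError("not enough drive bays")
    racks = math.ceil(SERVERS / SERVERS_PER_RACK)
    hdd_cost = drives * usd_per_drive
    rack_cost = SERVERS * SERVER_COST + racks * RACK_PLUS_SWITCH
    return racks, hdd_cost, rack_cost, hdd_cost + rack_cost

two_tb = fixed_node_cluster(1_000, 140)      # WD20EVDS, replicated
one_five_tb = fixed_node_cluster(1_334, 60)  # ST31500341AS, replicated
print(two_tb, one_five_tb, two_tb[3] - one_five_tb[3])
```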

There is a $60,000 capital cost difference between the two clusters. The 2TB drive cluster has about a quarter fewer drive failures (1,000 drives versus 1,334), but the larger drive costs more per GB.

Drive Failure Rate

(5 Year time to fail)

Petabyte 60 Server Cluster with RAID-10

| Drive | HDD cost | Labor | Cost per failure | HDD units | Days between failures | Failures per month | Cost per month |
|---|---|---|---|---|---|---|---|
| 2.0TB | $140 | $70 | $210 | 1,000 | 1.825 | 16.4 | $3,518 |
| 1.5TB | 60 | 70 | 130 | 1,334 | 1.368 | 21.9 | 2,851 |
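The failure columns follow from the pool size, a five-year average drive life, and the cost of each replacement (drive plus $70 labor). Here is a minimal sketch. It assumes a 30-day month, which is the month length that reproduces the 1.5TB row.

```python
MEAN_LIFE_DAYS = 5 * 365   # five-year average time to failure per drive
DAYS_PER_MONTH = 30        # assumption: 30-day month
LABOR_PER_SWAP = 70        # USD of labor per drive replacement

def monthly_failures(units: int, drive_cost: float) -> tuple[float, float, float]:
    """Return (days between failures, failures per month, replacement cost per month)."""
    gap_days = MEAN_LIFE_DAYS / units
    fails = DAYS_PER_MONTH / gap_days
    return gap_days, fails, fails * (drive_cost + LABOR_PER_SWAP)

for label, units, drive_cost in (("2.0TB", 1_000, 140), ("1.5TB", 1_334, 60)):
    gap, fails, dollars = monthly_failures(units, drive_cost)
    print(f"{label}: a failure every {gap:.3f} days, {fails:.1f}/month, ${dollars:,.0f}/month")
```

For the 2.0TB row, this arithmetic gives roughly $3,452 per month rather than $3,518. Either way, the monthly gap between the two drives amounts to a few hundred dollars.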

These numbers presume that both drives have the same MTBF. Digging a bit further, we find that the WD20EVDS claims 1 million hours MTBF and the ST31500341AS claims 750,000 hours MTBF. That is, the operations staff can expect the Seagate drives to fail at a rate 1/3 greater than that of the Western Digital drives.

Sidenote: The two drives are in different classes. The Seagate drive spins faster (7,200 RPM) and is pitched on performance. The slower Western Digital drive (5,400 RPM) is pitched on consistency and lower power. However, the slower speed is fine for the storage cluster, which acts as secondary storage.

I will not attempt to sort out the apples-to-oranges comparison between the two drive manufacturers. Instead, I’ll take my previous calculations and adjust the 1.5TB drive failure rate up by a third.

Drive Failure Rate

(Adjusted Fail Rate)

Petabyte 60 Server Cluster with RAID-10

| Drive | Cost per failure | HDD units | Original failures/month | Original cost/month | Adjusted failures/month | Adjusted cost/month |
|---|---|---|---|---|---|---|
| 2.0TB | $210 | 1,000 | 16.4 | $3,518 | 16.4 | $3,518 |
| 1.5TB | 130 | 1,334 | 21.9 | 2,851 | 29.2 | 3,801 |
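The adjustment scales the Seagate failure count by the inverse ratio of the two MTBF claims. This sketch assumes a constant failure rate, so that rate = 1 / MTBF. That is a simplification of real drive behavior, not something the vendors guarantee.

```python
WD20EVDS_MTBF_HOURS = 1_000_000
ST31500341AS_MTBF_HOURS = 750_000

# Under a constant failure rate, rate = 1 / MTBF, so the rate ratio is the inverse MTBF ratio.
rate_factor = WD20EVDS_MTBF_HOURS / ST31500341AS_MTBF_HOURS   # 4/3

units, drive_cost, labor = 1_334, 60, 70
base_fails = 30 * units / (5 * 365)    # unadjusted failures per 30-day month
adj_fails = base_fails * rate_factor
adj_cost = adj_fails * (drive_cost + labor)
print(f"x{rate_factor:.3f}: {adj_fails:.1f} failures/month, ${adj_cost:,.0f}/month")
```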

A reversal of recurring costs. Does it matter? No. Not really. In my opinion, it’s more important to minimize the day-to-day operation hassles. The three hundred bucks a month (one way or the other) is noise. The $60K difference in initial capital costs is significant but not as significant as reliable operations.

Rumor has it that both Seagate and Western Digital will soon release a 3TB drive.

Update 2010-12-17: Xbit Labs reports on Hitachi’s new sixth-generation perpendicular magnetic recording (PMR) technology, which is expected to “enable 3.5″ hard drives with 4TB or even 5TB capacities.”

My final ponderings on this fantasy cluster look at the impact of future HDD capacities. For this, I simply speculate on the cost per gigabyte. If you have pricing information more closely tied to reality, please let me know. 🙂

Drive Failure Rate

(unadjusted 5-year rates)

Petabyte 60 Server Cluster with RAID-10 (or equivalent)

| Drive | $/GB | HDD cost | Labor | Cost per failure | HDD units | Days between failures | Failures per month | Cost per month |
|---|---|---|---|---|---|---|---|---|
| 5.0TB | 0.100 | $500 | $70 | $570 | 400 | 4.564 | 6.6 | $3,762 |
| 4.0TB | 0.090 | 360 | 70 | 430 | 500 | 3.651 | 8.2 | 3,526 |
| 3.0TB | 0.080 | 240 | 70 | 310 | 667 | 2.737 | 11.0 | 3,410 |
| 2.0TB | 0.070 | 140 | 70 | 210 | 1,000 | 1.825 | 16.4 | 3,518 |
| 1.5TB | 0.040 | 60 | 70 | 130 | 1,334 | 1.368 | 21.9 | 2,851 |
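Each speculative row needs only two inputs: a capacity and a guessed $/GB. The sketch below derives the rest. It assumes 2 PB of raw disk (1 PB stored twice), a five-year average drive life, a 30-day month, and $70 labor per swap. Because it uses unrounded failure counts, its monthly costs land within about $15 of the table, which multiplies rounded counts.

```python
import math

PB_RAW_GB = 2_000_000      # 1 PB usable stored twice (RAID 10 or replication)
MEAN_LIFE_DAYS = 5 * 365
DAYS_PER_MONTH = 30
LABOR_PER_SWAP = 70

def capacity_point(drive_gb: int, usd_per_gb: float) -> tuple[int, float, float, float]:
    """Return (drives, cost per failure, failures per month, cost per month)."""
    drives = math.ceil(PB_RAW_GB / drive_gb)
    per_failure = drive_gb * usd_per_gb + LABOR_PER_SWAP
    fails = DAYS_PER_MONTH * drives / MEAN_LIFE_DAYS
    return drives, per_failure, fails, fails * per_failure

# Speculative $/GB figures from the table above.
for gb, usd_per_gb in ((5_000, 0.100), (4_000, 0.090), (3_000, 0.080)):
    drives, per_failure, fails, dollars = capacity_point(gb, usd_per_gb)
    print(f"{gb // 1000}TB: {drives} drives, ${per_failure:.0f}/failure, "
          f"{fails:.1f}/month, ${dollars:,.0f}/month")
```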

This isn’t the end of the tradeoff line. At some point, the 3.5 inch HDD will yield to the 2.5 inch form factor, and the larger drives just won’t be available. That dynamic will change the equation. GPU cards will become increasingly capable. CPU core counts will increase. RAM costs will decline. Fewer servers will be needed, and with them fewer racks and less power. I’m sure that in my naïveté I’ve underestimated much here. However, I do believe that one day the entire data center used to build Avatar will fit inside a 40 foot shipping container. Then, some time later (but not much later), that compute power will shrink to fit in a 20 foot container. And so on. The important point is that the capital cost (excluding facilities) of this fantasy cluster is under a million dollars, and it gets cheaper by the day.